
The beginning of my Jupyter notebook.

URLProxy module (<60 lines of code).

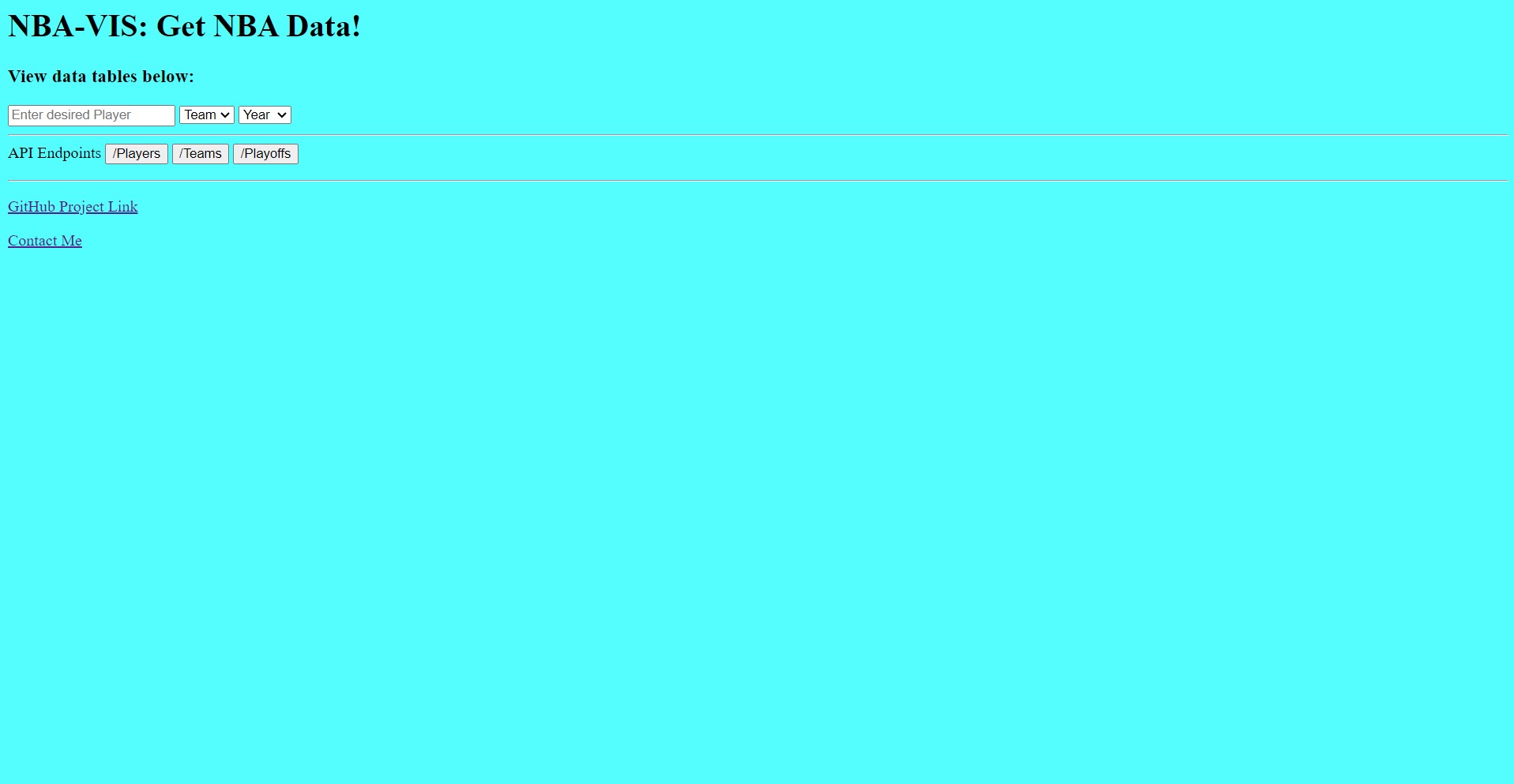

Current iteration of NBA-Vis.

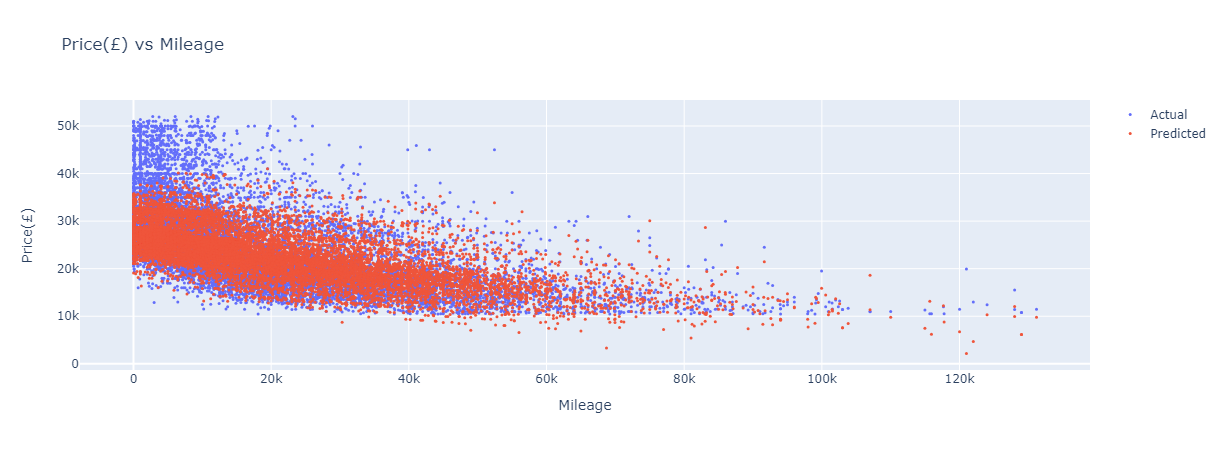

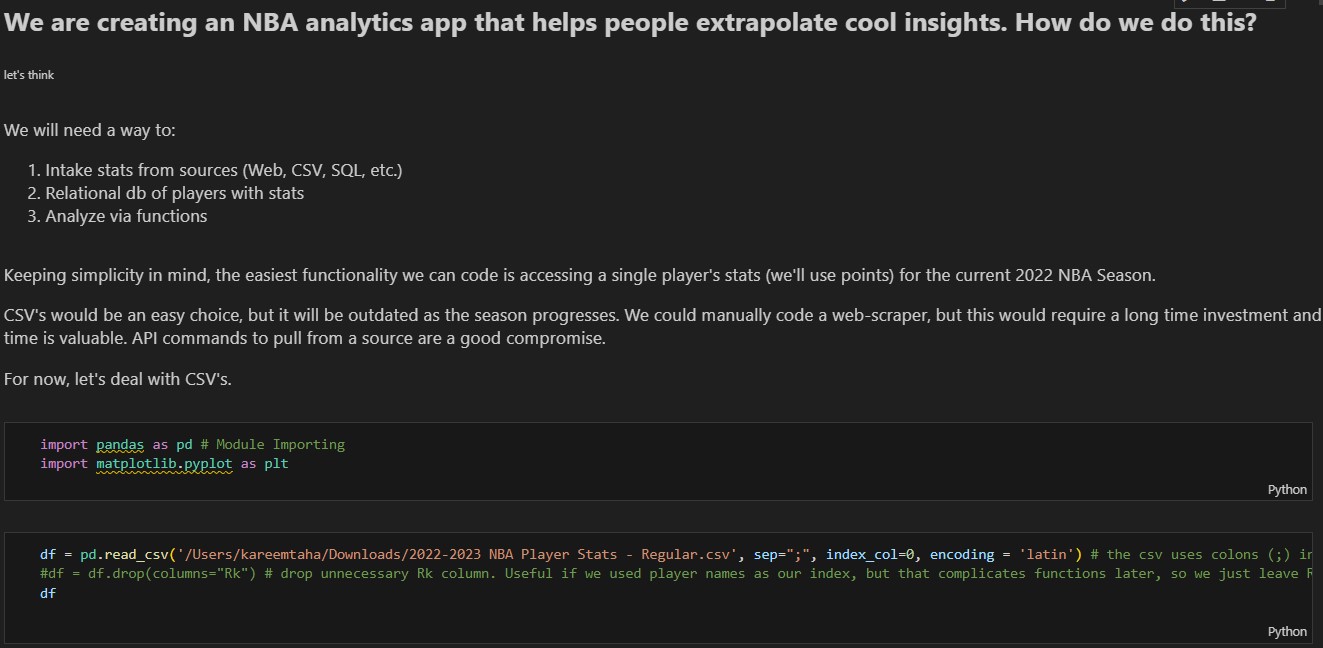

When I began writing NBA-Vis, my goal was very different from what the current revision does. This was my first major project (more than a month of coding). My original intention was to create visualizations of NBA data; I had no plan or direction for what the final product would look like, I just wanted to produce something tangible. I did not expect to write software libraries, host a webpage, or build a full-fledged database. First I found a data source: a .csv dataset featuring the season averages of all current players.

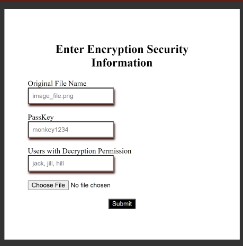

To keep track of my progress, I began development in a Jupyter notebook (.ipynb). These are normally used for lightweight projects: writing small pieces of code quickly and running small analyses. I wanted a tangible product fast, which meant rushing into visuals and graphs, and Jupyter is perfect for that. Once I wanted more than a single season's averages, I began writing code to web-scrape basketball-reference, since it had the most comprehensive data. The code was getting very long.

After I developed working functions to extract team/player data, I wanted to save my data. Before databases, there was Pickle. That's right, I used Pickles to store my data: pickle files, specifically, which are data files created by Python's object serialization library, pickle. Do not judge; I had not learned about databases and thought an object-oriented data implementation might be helpful, especially if I chose to create a client-side desktop program (by this point I had still not decided what final product I wanted). Soon, however, the project grew so large that I was forced to make design decisions I had not thought of earlier, such as finding a more feasible and scalable database design. I knew SQL but had never hosted a database, so I chose what was easiest and used a local SQLite installation.
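
To make the trade-off concrete, here is a minimal sketch of the same records stored both ways. It is not the original NBA-Vis code: the field names, the `player_season` table, and the sample rows are all made up for illustration, and plain dicts stand in for whatever objects were actually pickled.

```python
import pickle
import sqlite3

# Hypothetical records; the real NBA-Vis fields are not shown in this post.
rows = [
    {"name": "Player A", "season": "2018-19", "ppg": 21.4},
    {"name": "Player B", "season": "2018-19", "ppg": 9.8},
]

# Option 1: pickle serializes the whole Python structure to bytes in one shot.
# (pickle.dump(rows, file) would write the same bytes to a file on disk.)
blob = pickle.dumps(rows)
restored = pickle.loads(blob)

# Option 2: SQLite stores the rows in a table that can be queried with SQL.
# ":memory:" keeps the sketch self-contained; a file path would persist it.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE player_season (name TEXT, season TEXT, ppg REAL)")
conn.executemany(
    "INSERT INTO player_season VALUES (:name, :season, :ppg)",
    rows,
)
conn.commit()

# With pickle, filtering means loading everything back into memory first;
# with SQLite, the database does the filtering.
top_scorers = conn.execute(
    "SELECT name FROM player_season WHERE ppg > ? ORDER BY name",
    (20,),
).fetchall()
```

The pickle route is less code, but every read pulls the whole structure back into memory, which is roughly where a growing scrape starts to hurt.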

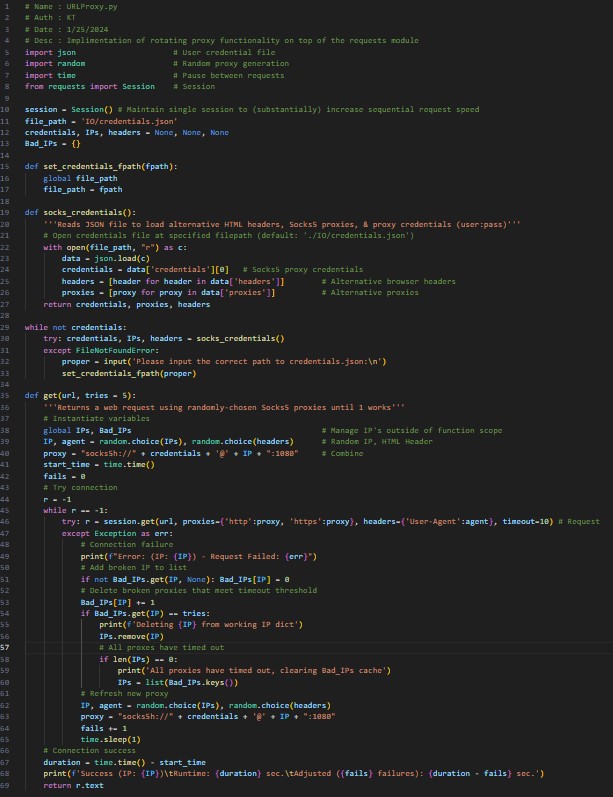

One issue I encountered was that web-scraping basketball-reference proved more difficult than I planned. The site uses rate-limiting to throttle repeated requests from the same client, so when I tried to retrieve lots of data at once (e.g., to build a database), the server would reject my connections after a few successful ones. Because I already have a VPN for my entirely legal personal shenanigans, I tried to use it to change my client connection, but this only avoided the block temporarily. I needed a solid solution.

After seeing that a VPN connection could temporarily bypass the block, I knew IPs were being logged. My VPN service came with proxy servers that alter your client IP, so I tried plugging that information into the Python 'requests' library to see if it would work without a VPN connection running on my entire machine. Success! But only temporarily. A single proxy behaved like a non-proxy connection: it would retrieve a few players' data and then the connection would be rejected. Changing the proxy worked the first few times, but soon even the first connection through a new proxy would fail. Something other than IPs must have been logged. Google and StackOverflow suggested changing the browser headers sent with each request, something I had little familiarity with, but that proved to be the solution I needed. Voilà! Adding some for loops to randomize the proxy and browser headers meant no two connections would look alike. Kareem 1 - Basketball reference 0.
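
The idea can be sketched roughly as follows. This is not the actual URLProxy code: the proxy addresses are placeholders from a reserved documentation range, the User-Agent strings are only examples, and `random_request_options` and `fetch` are hypothetical names. The only library calls assumed are `requests.get` with its `proxies`, `headers`, and `timeout` arguments.

```python
import random

# Hypothetical values: real proxy addresses come from the proxy/VPN provider,
# and these User-Agent strings are only illustrative examples.
PROXIES = [
    "http://203.0.113.10:8080",
    "http://203.0.113.11:8080",
    "http://203.0.113.12:8080",
]
USER_AGENTS = [
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
    "(KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36",
    "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/605.1.15 "
    "(KHTML, like Gecko) Version/17.0 Safari/605.1.15",
    "Mozilla/5.0 (X11; Linux x86_64; rv:121.0) Gecko/20100101 Firefox/121.0",
]


def random_request_options(rng=random):
    """Pick a random proxy and User-Agent for a single request."""
    proxy = rng.choice(PROXIES)
    return {
        # Route both http and https traffic through the chosen proxy.
        "proxies": {"http": proxy, "https": proxy},
        "headers": {"User-Agent": rng.choice(USER_AGENTS)},
    }


def fetch(url, attempts=3, timeout=10):
    """Try a URL up to `attempts` times, drawing fresh options each time."""
    import requests  # third-party; imported here so the helper above works without it

    last_error = None
    for _ in range(attempts):
        try:
            response = requests.get(url, timeout=timeout, **random_request_options())
            response.raise_for_status()
            return response.text
        except requests.RequestException as error:
            last_error = error  # rejected or failed: retry with new options
    raise RuntimeError(f"all {attempts} attempts failed for {url}") from last_error
```

Keeping the random choice in its own function means the rotation logic can be checked without touching the network, and `fetch` stays a thin wrapper around it.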

Soon thereafter, I realized that if I wanted to do any solid analytics/visualization work, I would need a robust and comprehensive database that could help me find any insight. More of my time went to data engineering, as I needed to think through how to acquire (build db) and retrieve (read db) all the necessary data. Would I send SQL queries manually? Build the database as necessary? Have functions with pre-set SQL data retrievals? Use an online API? This was the second major design flaw/decision I encountered as a result of poor preparation. I chose to build my database comprehensively, use a robust solution (MySQL) that supports many features, and host it online so I could also offer an API. Two major new skills I acquired were Flask and Docker, which I enjoyed learning. These design choices remain in the current iteration of NBA-Vis.
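
As an illustration of the "functions with pre-set SQL data retrievals" option, here is a small sketch. It uses Python's built-in sqlite3 as a stand-in so it runs on its own; the project itself uses MySQL, and the schema, sample rows, and the `build_demo_db` and `team_roster` names are assumptions made for this example.

```python
import sqlite3


def build_demo_db():
    """Create a tiny in-memory table; schema and rows are made up for this sketch."""
    conn = sqlite3.connect(":memory:")
    conn.row_factory = sqlite3.Row  # lets each row be converted to a dict
    conn.execute(
        "CREATE TABLE player_season (name TEXT, team TEXT, season TEXT, ppg REAL)"
    )
    conn.executemany(
        "INSERT INTO player_season VALUES (?, ?, ?, ?)",
        [
            ("Player A", "AAA", "2018-19", 21.4),
            ("Player B", "AAA", "2018-19", 9.8),
            ("Player C", "BBB", "2018-19", 15.1),
        ],
    )
    return conn


def team_roster(conn, team, season):
    """Pre-set retrieval: callers pass values, never raw SQL."""
    cursor = conn.execute(
        "SELECT name, ppg FROM player_season "
        "WHERE team = ? AND season = ? ORDER BY ppg DESC",
        (team, season),
    )
    return [dict(row) for row in cursor]


conn = build_demo_db()
roster = team_roster(conn, "AAA", "2018-19")
```

A function like this is also a natural unit to put behind an API: a Flask route could call it and return the resulting list as JSON, so the SQL stays in one place.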

NBA-Vis is my oldest major project. The code, design, and purpose of the program have changed drastically over time. Having started this project early in my undergrad, I realized it would be beneficial to slow down, not rush development, and wait until I had acquired the skills necessary to make this a solid product instead of wasting time with Pickles. My recent development has gone towards making the web-scraping portion of my code a separate module/library so that others can use it. My first iteration was a very lightweight Python library named 'URLProxy'. However, I also wanted to develop new skills and improve my software engineering, so I spent a few months afterwards writing the same library in C++, mostly to gain experience with the language, its libraries, cross-platform development, and compilation. NBA-Vis was a great learning experience because I picked up many new tools, but also because its technical and design challenges showed me first-hand the importance of proper planning, foresight, and design.